WoMAP: World Models For Embodied Open-Vocabulary Object Localization

We introduce World Models for Active Perception (WoMAP), a recipe for training open-vocabulary object localization policies that are grounded in the physical world.

Language-instructed active object localization remains a critical challenge for robots, requiring efficient exploration of partially observable environments. However, state-of-the-art approaches either struggle to generalize beyond demonstration datasets (e.g., imitation learning methods) or fail to generate physically grounded actions (e.g., VLMs). To address these limitations, we introduce World Models for Active Perception (WoMAP): a recipe for training open-vocabulary object localization policies that (i) uses a Gaussian Splatting-based real-to-sim-to-real pipeline for scalable data generation without the need for expert demonstrations, (ii) distills dense reward signals from open-vocabulary object detectors, and (iii) leverages a latent world model for dynamics and reward prediction to ground high-level action proposals at inference time. Rigorous simulation and hardware experiments demonstrate WoMAP's superior performance in a broad range of zero-shot object localization tasks, with more than 9x and 2x higher success rates compared to VLM and diffusion policy baselines, respectively. Further, we show that WoMAP achieves strong generalization and sim-to-real transfer on a TidyBot.

We introduce a scalable real-to-sim-to-real data generation pipeline that utilizes only a few real-world videos to train Gaussian Splats, render novel observations from sampled camera positions, and annotate each observation with a pretrained object detector to offer dense training signals.
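The data generation loop described above (sample a camera position, render an observation, annotate it with a detector) can be sketched roughly as follows. This is only an illustrative outline, not the authors' implementation: `generate_dataset`, `Sample`, and the `render` and `annotate` callables are hypothetical names, and uniform sampling within axis-aligned bounds is an assumption about how camera positions might be drawn.

```python
import random
from dataclasses import dataclass
from typing import Any, Callable, List, Sequence, Tuple

Pose = Tuple[float, ...]


@dataclass
class Sample:
    pose: Pose          # sampled camera position
    observation: Any    # image rendered at that position
    label: Any          # detector output used as a dense training signal


def generate_dataset(
    render: Callable[[Pose], Any],      # hypothetical: renders the trained splat at a pose
    annotate: Callable[[Any], Any],     # hypothetical: wraps a pretrained object detector
    bounds: Sequence[Tuple[float, float]],
    num_samples: int,
    seed: int = 0,
) -> List[Sample]:
    """Sample poses uniformly within bounds (an assumption), render, and annotate."""
    rng = random.Random(seed)
    samples = []
    for _ in range(num_samples):
        pose = tuple(rng.uniform(lo, hi) for lo, hi in bounds)
        observation = render(pose)
        samples.append(Sample(pose, observation, annotate(observation)))
    return samples
```

In practice `render` would call into a Gaussian Splatting renderer and `annotate` into an open-vocabulary detector; both are left abstract here because the text does not specify their interfaces.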

We propose a world model architecture that combines a latent dynamics model with a reward model to predict and evaluate expected rewards given actions, thereby grounding high-level action proposals in the physical world. A central innovation of our pipeline is the use of dense reward distillation from open-vocabulary object detectors, which allows for data-efficient training without relying on image reconstruction objectives.
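As a rough illustration of how a latent dynamics model and a reward model can be combined to evaluate action proposals, the sketch below rolls each proposed action sequence forward in latent space and ranks the proposals by predicted reward. The names `rank_proposals`, `dynamics`, and `reward` are hypothetical, and summing predicted rewards over the rollout is an assumed scoring rule; the learned networks and the exact criterion used in the paper may differ.

```python
from typing import Callable, List, Sequence, Tuple

Latent = Tuple[float, ...]
Action = Tuple[float, ...]


def rank_proposals(
    z0: Latent,
    proposals: Sequence[Sequence[Action]],
    dynamics: Callable[[Latent, Action], Latent],  # hypothetical latent dynamics model
    reward: Callable[[Latent], float],             # hypothetical reward model
) -> Tuple[int, List[float]]:
    """Score each action sequence by its summed predicted reward (an assumption)."""
    if not proposals:
        raise ValueError("at least one action proposal is required")
    scores = []
    for plan in proposals:
        z, total = z0, 0.0
        for action in plan:
            z = dynamics(z, action)   # predict the next latent state
            total += reward(z)        # predict the reward at that state
        scores.append(total)
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores


# Toy stand-ins for the learned models, for demonstration only.
def toy_dynamics(z: Latent, a: Action) -> Latent:
    return tuple(zi + ai for zi, ai in zip(z, a))


def toy_reward(z: Latent) -> float:
    target = (1.0, 0.0)
    return -sum((zi - ti) ** 2 for zi, ti in zip(z, target))
```

With the toy models, `rank_proposals((0.0, 0.0), [[(1.0, 0.0)], [(0.0, 1.0)]], toy_dynamics, toy_reward)` selects the first proposal, since it moves the latent state onto the toy target.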

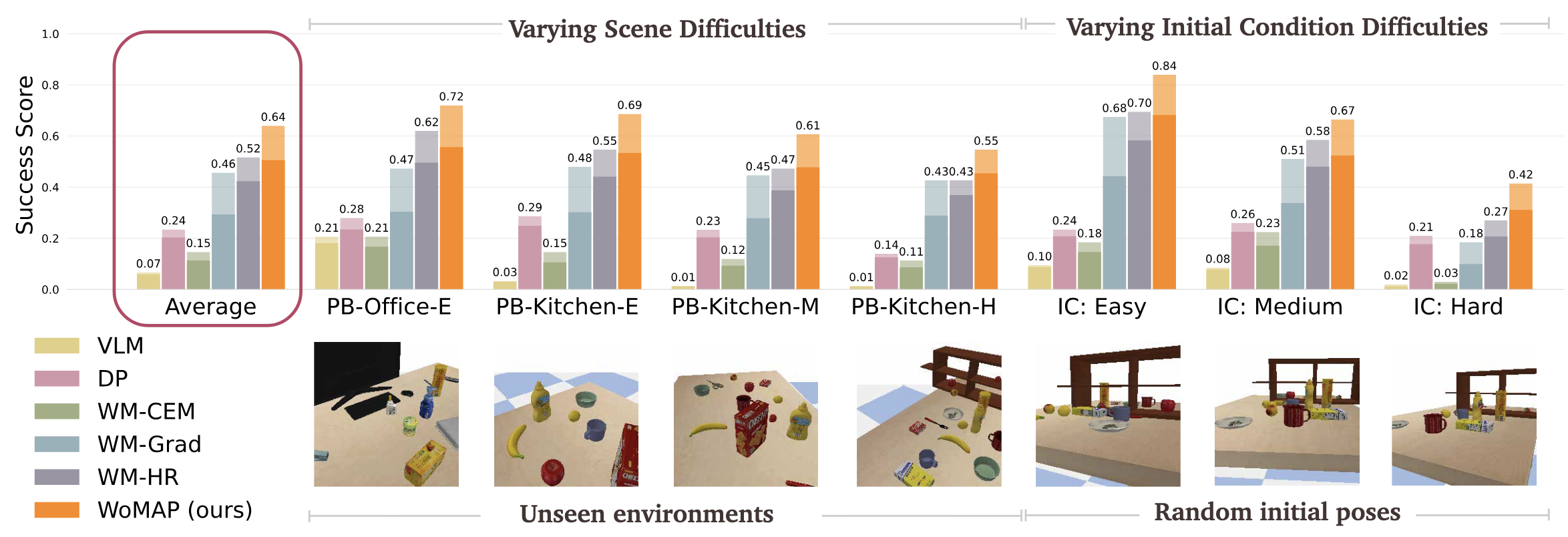

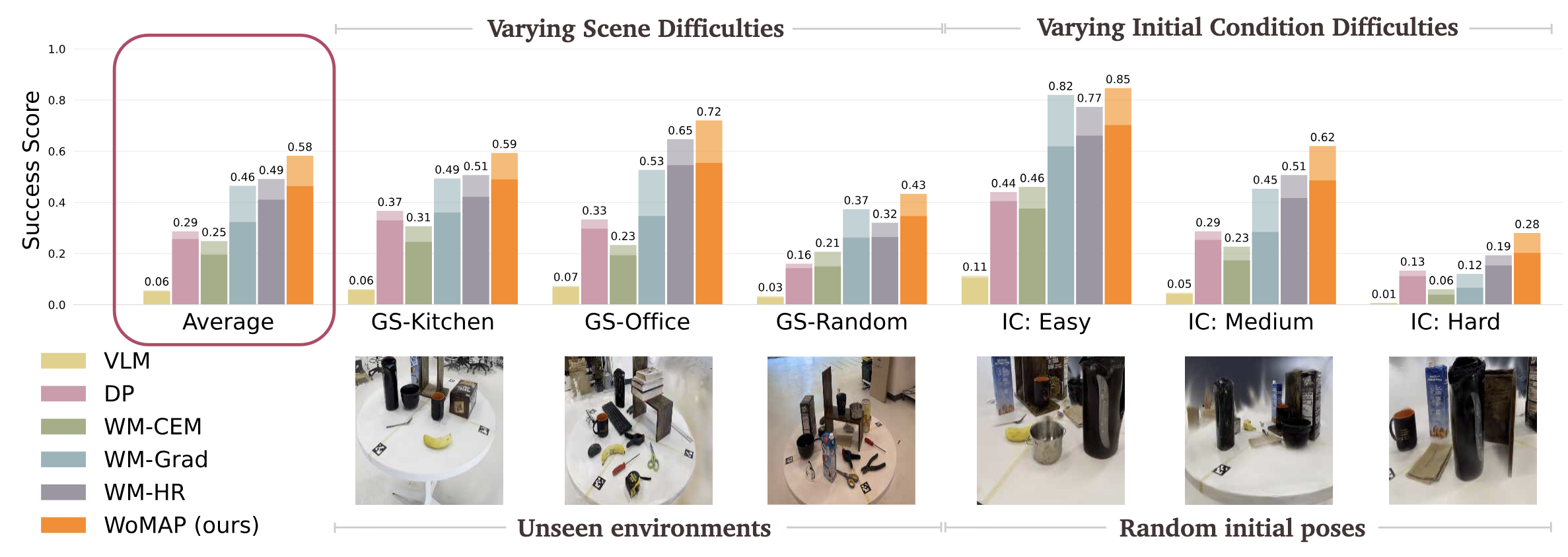

We benchmark WoMAP against a VLM-based planner (VLM), diffusion policy (DP), and three world-model-only planners: WM-CEM, WM-Grad, and WM-HR, in seven challenging PyBullet (PB) and Gaussian Splatting (GS) environments, where the robot must efficiently locate the query object from an arbitrary initial view in an unseen scene configuration. In all tasks, WoMAP attains higher success rates and efficiency scores than all other methods. Even when trained only in Gaussian Splat simulation, WoMAP achieves strong sim-to-real transfer compared to the baselines (see the paper for more details).

*Success rates and efficiency scores are represented by transparent and solid bars, respectively. Results are presented in the order of increasing difficulty and initial-pose conditions: easy (E), medium (M), and hard (H).

We evaluate WoMAP's sim-to-real transfer ability with 20 hardware trials for each of the 3 corresponding real-world tasks on the TidyBot. Despite being trained entirely in Gaussian Splat simulation, WoMAP transfers effectively to the real world, maintaining nearly the same success rates and efficiency scores as in simulation, with a worst-case performance drop of 23%.

We evaluate WoMAP in out-of-distribution lighting and background conditions with the model trained on nominal conditions in simulation, demonstrating the robustness of our dynamics and rewards prediction module, and the rich visual-semantic information encoded in the latent state.

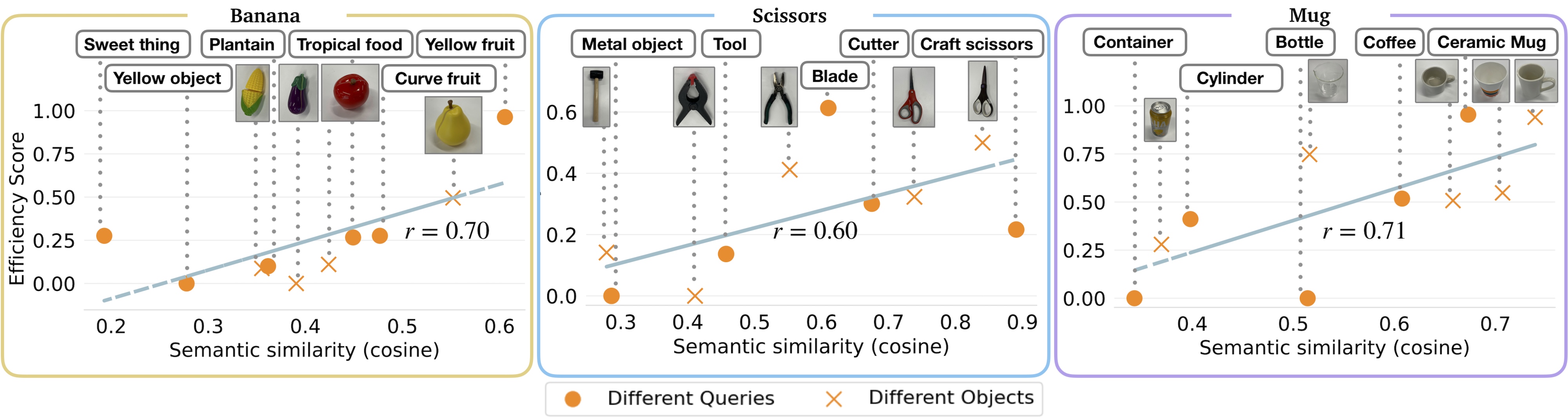

We evaluate WoMAP's generalization to unseen target objects and task instructions along two axes: (i) unseen language instructions for finding target objects that were seen during training, and (ii) unseen target objects with unseen language instructions. WoMAP achieves strong zero-shot semantic generalization, and we observe a positive correlation with the semantic similarity (cosine distance) between each object/query and the most similar object among our training objects.
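To make the similarity measure concrete, the sketch below computes the cosine similarity between a query embedding and each training-object embedding and returns the closest match. The function names and the toy embeddings are illustrative assumptions; the text does not specify which embedding model is used. Cosine distance, where needed, is one minus the cosine similarity.

```python
import math
from typing import Dict, Sequence, Tuple


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero vectors of equal length."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return sum(a * b for a, b in zip(u, v)) / (norm_u * norm_v)


def nearest_training_object(
    query: Sequence[float],
    training_embeddings: Dict[str, Sequence[float]],  # hypothetical name -> embedding map
) -> Tuple[str, float]:
    """Return the training object most similar to the query, with its similarity."""
    if not training_embeddings:
        raise ValueError("no training objects provided")
    sims = {
        name: cosine_similarity(query, emb)
        for name, emb in training_embeddings.items()
    }
    best = max(sims, key=sims.get)
    return best, sims[best]
```

For example, with toy embeddings `{"mug": (1.0, 0.0), "banana": (0.0, 1.0)}`, a query embedding of `(2.0, 0.0)` is matched to `"mug"`, because cosine similarity ignores vector magnitude.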

@misc{yin2025womapworldmodel,
  title={WoMAP: World Models For Embodied Open-Vocabulary Object Localization},
  author={Tenny Yin and Zhiting Mei and Tao Sun and Lihan Zha and Emily Zhou and Jeremy Bao and Miyu Yamane and Ola Shorinwa and Anirudha Majumdar},
  year={2025},
  eprint={2506.01600},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2506.01600},
}